Applying Thompson Sampling To The Interview Process

(Image by Macoto Murayama)

NOTE: This post is not necessarily advocating for this approach. I think it’s an interesting idea so I’m putting it out there. This approach, as is, does not account for subjective biases, but it could provide the data to measure and compensate for them.

One of the problems I’ve observed with interviewing candidates across several companies is the informality of the process. It often feels more like reading tea leaves than finding the best person. While many companies attempt to make this more rigorous, they often just end up doing the same informal thing at the end – “Did we all generally like this person and feel good about them or not?”

A process that is so informal and inexact is also impossible to improve upon. No real data is collected, and no real feedback is received. Companies that do collect data typically wait until they’ve collected a lot of data before analysis, potentially passing up great candidates along the way.

As a thought experiment, could we use some statistical tools to make this informal process more rigorous, provide a basis for continual improvement, and increase the likelihood we choose the best candidates along the way?

Interviews And Medical Trials

Luckily for us, medical science was forced to ask a similar question ~85 years ago. The setup is simple:

- You have two treatments, A and B. Maybe placebo (A) and experimental cancer treatment (B).

- You are not sure which is better.

- How do you decide how to distribute the treatments to incoming patients in a way that, in the aggregate, minimizes harm and maximizes good?

Confronted with exactly this scenario, William Thompson got to thinking, and he decided to model this problem using the standard statistical tools of the time. He first remarks in [3]:

“From this point of view there can be no objection to the use of data, however meagre, as a guide to action before more can be collected”

In other words, we don’t have a lot of data, data is expensive and/or time-consuming to get, but we want to see what we can do with what we’ve got.

The natural objection to this is that with a tiny number of samples you can’t really be sure of anything. To which Thompson replies:

“Indeed, the fact that such objection can never be eliminated entirely – no matter how great the number of observations – [suggests] the possible value of seeking other modes of operation than that of taking a large number of observations before analysis or any attempt to direct our course”

Essentially – yeah, that’s true, but there’s never a time when that’s not going to be true. We can’t be 100% certain, but does that mean we should give up trying to improve until we’ve done a bunch of experiments? That sounds slower and potentially more harmful than an approach that continuously improves along the way. Thompson’s proposed method for doing this is the following [1]:

- Select between A and B proportionally to their expected benefit (at the start of the trial you can assume they’re equal).

- Continually update our beliefs after each trial using Bayes’ theorem.

So, if we think there’s an 80% chance A is better than B and we get a new patient, we give them treatment A with an 80% probability. We then wait and observe the outcome, and based on that we use Bayes’ theorem to update our belief about the effectiveness of A relative to B.
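The two steps above can be sketched in a few lines of Python. This is only an illustration under assumptions not stated in the text: outcomes are binary (treatment worked or not), each treatment starts with a uniform Beta(1, 1) prior, and the function name `thompson_trial` and its parameters are hypothetical. Drawing one sample from each posterior and picking the largest selects a treatment with probability equal to the current belief that it is the better one.

```python
import random


def thompson_trial(true_rates, n_patients, seed=0):
    """Simulate a trial; true_rates are hidden success rates used only to generate outcomes."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(n_patients):
        # One draw from each treatment's Beta posterior (uniform prior assumed).
        draws = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = draws.index(max(draws))
        # Observe the outcome and update the counts; with a Beta prior and
        # binary outcomes this count update is the Bayesian update.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return successes, failures


successes, failures = thompson_trial([0.3, 0.7], n_patients=100)
print("patients per treatment:", [s + f for s, f in zip(successes, failures)])
```

As outcomes accumulate, the posterior for the better treatment tends to concentrate on higher values, so it is chosen more often.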

Comparison To Other Strategies

What follows in [3] is quite a bit of math, but what’s really important is how this approach stacks up against the two biggest contenders. Assuming we’re running a trial with 100 patients, let’s examine more closely (this is adapted from the great presentation in [4]).

The first contender: if we’re 80% confident treatment A is better than B, why not just give every patient treatment A? This may, indeed, be an acceptable solution, but there’s still a 20% chance we’re wrong, and in that case all 100 patients get the worse treatment. So long as we’re willing to live with a permanent regret of n(1 – p) = 100 * (1 – 0.80) = 20, this may be an acceptable solution.

The second contender: why not give 50% of the patients treatment A and 50% of the patients treatment B? Again, this may indeed be an acceptable solution, so long as we’re willing to live with 0.5n = 0.5 * 100 = 50 regret – that means 50 of our 100 patients getting the worse treatment.

How about Thompson Sampling? In this approach we give out treatment A with 80% probability and continuously update this probability based on the observed outcomes. In the 80% case, this means we have a temporary regret of pn(1 – p) + (1 – p)np = 2np(1 – p) = 2 * 100 * 0.80 * 0.20 = 32. While this is initially worse than the first approach considered, we are continually gaining feedback along the way. This means our regret should tend to decrease as we observe more outcomes, and more patients should eventually receive the better treatment. Not to mince words: we are sacrificing some early patients, in order to learn and gain feedback, to improve outcomes for later patients.
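For reference, the three regret figures above can be recomputed directly from the formulas in the text. The function names are hypothetical; `n` is the number of patients and `p` is our confidence that treatment A is better.

```python
def regret_always_a(n, p):
    # Everyone gets A; with probability (1 - p) that is the worse treatment.
    return n * (1 - p)


def regret_even_split(n):
    # Half the patients get the worse treatment no matter which one it is.
    return 0.5 * n


def regret_probability_matching(n, p):
    # Give A with probability p: p*n*(1 - p) + (1 - p)*n*p = 2*n*p*(1 - p).
    return 2 * n * p * (1 - p)


n, p = 100, 0.80
print(round(regret_always_a(n, p), 2))              # 20.0
print(round(regret_even_split(n), 2))               # 50.0
print(round(regret_probability_matching(n, p), 2))  # 32.0
```

The last figure is only the starting regret, since p is updated after each outcome.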

Application To Interviews

With a little bit of imagination and some squinting, we can begin to see how this could be applied to interviews. Let’s assume our interview process is something like the following:

- A candidate comes in.

- They go through 4 interviews.

- Each interviewer ranks the candidate with a yes or a no.

Mapping this into the formula from the medical trial above:

- We have two candidates A and B.

- We’re not sure which is better.

- How do we choose between them in such a way that we maximize our chances of getting the better candidate?

If we consider each interview to be a single trial, then we can use our yeses and nos to compare the quality of candidates. We can then create a graph of these pairwise comparisons and use that graph to rank candidates. So, what does this look like?…

Prototyping The Process

I’ve created a sample project on GitHub as a proof-of-concept.

The program takes 5 potential candidates and sends them through a mock interview process. Each candidate undergoes 4 interviews. After each interview they are given a Yes (Should hire) or No (Should not hire) by the interviewer. The probability of a Yes is proportional to the candidate’s “quality” – a hypothetical objective measure of how qualified the individual is for the position. Below is a sample run of the program:

    [EXPECTED]
    0: 0.100000
    1: 0.200000
    2: 0.300000
    3: 0.400000
    4: 0.500000
    Conducting interviews...
    [ACTUAL]
    0: 0.100000 - 0 4
      0 1 - 0 4 3 1 - 0.023810
      0 2 - 0 4 1 3 - 0.222222
      0 3 - 0 4 4 0 - 0.003968
      0 4 - 0 4 2 2 - 0.083333
    1: 0.200000 - 3 1
      1 0 - 3 1 0 4 - 0.976190
      1 2 - 3 1 1 3 - 0.896825
      1 3 - 3 1 4 0 - 0.222222
      1 4 - 3 1 2 2 - 0.738095
    2: 0.300000 - 1 3
      2 0 - 1 3 0 4 - 0.777778
      2 1 - 1 3 3 1 - 0.103175
      2 3 - 1 3 4 0 - 0.023810
      2 4 - 1 3 2 2 - 0.261905
    3: 0.400000 - 4 0
      3 0 - 4 0 0 4 - 0.996032
      3 1 - 4 0 3 1 - 0.777778
      3 2 - 4 0 1 3 - 0.976190
      3 4 - 4 0 2 2 - 0.916667
    4: 0.500000 - 2 2
      4 0 - 2 2 0 4 - 0.916667
      4 1 - 2 2 3 1 - 0.261905
      4 2 - 2 2 1 3 - 0.738095
      4 3 - 2 2 4 0 - 0.083333

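Under the “[EXPECTED]” heading is a list mapping each candidate ID to its objective quality. The candidates then undergo interviews. Under “[ACTUAL]” we print each candidate’s ID, objective quality, and number of yeses and nos, followed by an adjacency list of their performance relative to the others. Each adjacency entry lists the two candidate IDs, both candidates’ yes/no counts, and the estimated probability that the first candidate is better than the second.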
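One way to reproduce pairwise numbers like these is sketched below. It is not necessarily how the sample project computes them. It assumes each candidate’s unknown “yes” rate starts with a uniform Beta(1, 1) prior, so that after y yeses and n nos the posterior is Beta(y + 1, n + 1), and it computes the exact probability that one posterior rate exceeds the other. All function names here are hypothetical.

```python
import random
from fractions import Fraction
from math import comb, factorial


def simulate_interviews(qualities, n_interviews=4, seed=0):
    """Return (yeses, nos) per candidate; each interviewer says yes with probability `quality`."""
    rng = random.Random(seed)
    results = []
    for quality in qualities:
        yeses = sum(rng.random() < quality for _ in range(n_interviews))
        results.append((yeses, n_interviews - yeses))
    return results


def beta_fn(p, q):
    """Beta function B(p, q) for positive integers, as an exact fraction."""
    return Fraction(factorial(p - 1) * factorial(q - 1), factorial(p + q - 1))


def prob_first_better(yes_a, no_a, yes_b, no_b):
    """P(rate_a > rate_b) under independent Beta posteriors with uniform priors (assumed)."""
    a, b = yes_a + 1, no_a + 1
    c, d = yes_b + 1, no_b + 1
    n = a + b - 1
    # For integer parameters, 1 - CDF of Beta(a, b) at x is a binomial sum over
    # j = 0..a-1; integrating each term against the Beta(c, d) density gives a
    # ratio of Beta functions.
    total = sum(comb(n, j) * beta_fn(c + j, d + n - j) for j in range(a))
    return total / beta_fn(c, d)


# Yes/no counts taken from the sample run above.
counts = [(0, 4), (3, 1), (1, 3), (4, 0), (2, 2)]
for i, (yi, ni) in enumerate(counts):
    for j, (yj, nj) in enumerate(counts):
        if i != j:
            print(i, j, f"{float(prob_first_better(yi, ni, yj, nj)):.6f}")
```

Under these assumptions, candidate 0 (0 yeses, 4 nos) against candidate 1 (3 yeses, 1 no) gives exactly 1/42, which rounds to the 0.023810 shown in the sample run. `simulate_interviews` is included only to show how yes/no counts like those could be generated from the qualities.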

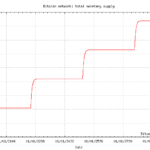

The graph shows the pairwise probabilities for the candidates. The most qualified candidate based on Thompson Sampling is in green (C3), the worst is in red (C0). This matches up fairly well with the expected worst (C0) and expected best (C4).

I think this would be interesting to apply as one source of signal during the interview process, especially when making final decisions between candidates.

References

[1]: D. Brice. PapersWeLove LA Meetup on Thompson Sampling. 2017-02-22.

[2]: O. Chapelle, L. Li. “An Empirical Evaluation of Thompson Sampling”. 2011.

[3]: W. Thompson. “On the likelihood that one unknown probability exceeds another in view of the evidence of two samples”. 1933.

[4]: Regret (decision theory). https://en.wikipedia.org/wiki/Regret_(decision_theory)