Getting Circular With SDL Audio

(Image: Wikimedia Commons)

Recently I’ve been having a lot of fun following along with Casey Muratori’s Handmade Hero project. As far as I know, this is the first time a seasoned game industry vet like Casey has graciously decided to share the step-by-step creation of a professional-quality game. Amazingly, he’s also been including videos detailing every line of code and every major architectural decision.

However, following along with the code examples can be tough if you’re not on a Windows machine. In my case, early on I decided to replace the DirectX dependencies with Simple DirectMedia Layer (SDL). SDL is a library I’ve used for years and have found to be a reliable tool for building audio/visual applications and games across Windows, macOS, and Linux.

Unfortunately, it seems to be a rule that no two interfaces to graphics or sound cards function quite the same way. This means that mapping DirectX code onto the SDL APIs can sometimes require quite a bit of legwork.

In particular, episodes 008 and 009, where Casey shows us how to play a square wave and a sine wave using DirectSound, have no immediate equivalents in SDL. However, with some work they can be translated into SDL, and in the process we can even set ourselves up for a multithreaded implementation.

We’ll start with some background on how computer sound generation works in general, then move on to SDL specifics, and end with the implementation of a circular buffer for asynchronous audio playback in SDL.

Computer Audio Basics

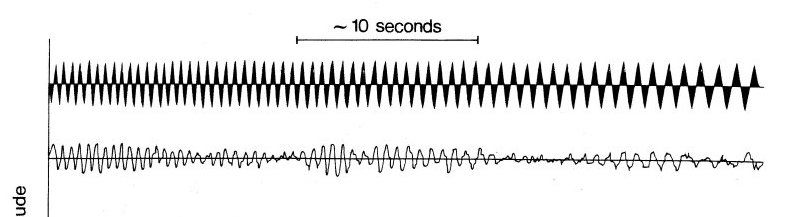

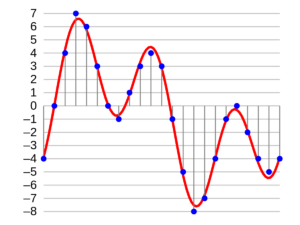

Almost all digital devices generate audio using some variant of a method known as Linear Pulse-code Modulation (LPCM). LPCM generates sound from a digital signal by streaming a sequence of quantized digital values, each representing an amplitude, from memory out to the sound card, which converts them into the analog signal that drives the speaker. The sound card consumes this stream at a regular interval, called the sampling rate, and, if the rate is high enough, can reproduce any sound whose frequencies lie below half the sampling rate (the Nyquist limit). In the images above you can see how such sampling approaches a continuous analog signal: the more dots (LPCM samples) we can squish into a given span of time, the higher the fidelity to the original signal.
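To make the sampling idea concrete, here is a small sketch in the same C-style C++ as the rest of this post (no SDL needed). `QuantizeToPcm16` and `SampleAnalogSine` are hypothetical helpers, not part of SDL or Handmade Hero: the first maps an amplitude in [-1, 1] onto a signed 16-bit value, and the second evaluates a continuous sine wave at each sampling instant and quantizes the result.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: map an amplitude in [-1.0, 1.0] to a signed 16-bit
// LPCM value. Out-of-range input is clamped instead of wrapping around.
int16_t QuantizeToPcm16(double Amplitude)
{
    if (Amplitude > 1.0) Amplitude = 1.0;
    if (Amplitude < -1.0) Amplitude = -1.0;
    return (int16_t)std::lround(Amplitude * 32767.0);
}

// Hypothetical helper: sample a continuous sine wave of ToneHz at a fixed
// sampling rate, quantizing each point. Out must hold SampleCount values.
void SampleAnalogSine(double ToneHz, int SamplesPerSecond,
                      int16_t* Out, int SampleCount)
{
    assert(SamplesPerSecond > 0);
    const double Tau = 6.283185307179586;
    for (int Index = 0; Index < SampleCount; ++Index)
    {
        double Time = (double)Index / SamplesPerSecond; // seconds since start
        Out[Index] = QuantizeToPcm16(std::sin(Tau * ToneHz * Time));
    }
}
```

For example, a 12 kHz tone sampled at 48 kHz gets exactly four samples per cycle (zero, peak, zero, trough), which is about as coarse as a sine can get while still staying well under the Nyquist limit.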

LPCM signals are typically represented digitally by 8-, 16-, 24-, or 32-bit values (called the bit depth of the signal). Samples can also be monaural (1 channel) or stereo (2 channels). Depending on the number of channels, the sound card will grab either 1 or 2 values per sample. With a monaural sample, it grabs one value and mirrors it on both speakers. With a stereo setup it grabs two values: one for the left speaker and one for the right speaker. The table below summarizes this point (assuming a 16-bit depth):

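| Channels | Values per sample | Bytes per sample (16-bit) |
| --- | --- | --- |
| Mono (1) | 1 (mirrored on both speakers) | 2 |
| Stereo (2) | 2 (left, then right) | 4 |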
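The stereo row of the table corresponds to an interleaved layout in memory: left and right values alternate, so each 16-bit stereo sample takes 4 bytes. The sketch below illustrates that; `InterleaveStereo` and `BytesPerFrame` are hypothetical helper names, not SDL functions.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: interleave separate left/right channel arrays into one
// stereo LPCM stream laid out as L0 R0 L1 R1 ...
void InterleaveStereo(const int16_t* Left, const int16_t* Right,
                      int16_t* Out, int FrameCount)
{
    assert(FrameCount >= 0);
    for (int Frame = 0; Frame < FrameCount; ++Frame)
    {
        Out[2 * Frame + 0] = Left[Frame];  // left speaker
        Out[2 * Frame + 1] = Right[Frame]; // right speaker
    }
}

// Hypothetical helper: bytes taken by one sample across all channels.
int BytesPerFrame(int Channels, int BitDepth)
{
    assert(Channels > 0 && BitDepth % 8 == 0);
    return Channels * (BitDepth / 8);
}
```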

SDL Audio

With these basics explained, SDL’s audio subsystem becomes much more approachable. Initialization requires some hopefully familiar information (see the SDL_OpenAudioDevice documentation for full details):

// Assumes SDL_Init(SDL_INIT_AUDIO) has already succeeded
SDL_AudioSpec RequestedSettings = {};
RequestedSettings.freq = 48000;                      // Our sampling rate
RequestedSettings.format = AUDIO_S16;                // Use signed 16-bit amplitude values
RequestedSettings.channels = 2;                      // Stereo samples
RequestedSettings.samples = 4096;                    // Size, in samples, of audio buffer
RequestedSettings.callback = &FillAudioDeviceBuffer; // Function called when sound device needs data
RequestedSettings.userdata = &AudioSettings;         // Pass in data structure we'll use to communicate audio config

SDL_AudioSpec ObtainedSettings = {};
SDL_AudioDeviceID DeviceID = SDL_OpenAudioDevice(
    NULL, 0, &RequestedSettings, &ObtainedSettings, 0
);
if (DeviceID == 0)
{
    SDL_Log("SDL_OpenAudioDevice failed: %s", SDL_GetError());
}

// Unpause the device to start playback
SDL_PauseAudioDevice(DeviceID, 0);
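A quick way to sanity-check these settings is to work out how many bytes the callback will be asked to fill and how much audio one device buffer holds. This sketch assumes SDL grants the request unchanged (the obtained spec's `size` field reports the actual buffer size in bytes); `requested_audio_spec` is a hypothetical stand-in for the SDL_AudioSpec fields used above, so it compiles without SDL.

```cpp
#include <cassert>

// Hypothetical plain-data stand-in for the SDL_AudioSpec fields used above.
struct requested_audio_spec
{
    int Freq;            // samples per second
    int Channels;        // 1 = mono, 2 = stereo
    int BytesPerChannel; // 2 for 16-bit audio such as AUDIO_S16
    int Samples;         // device buffer size, in samples
};

// Bytes the callback would be asked to fill per call (its Length argument).
int CallbackLengthBytes(const requested_audio_spec& Spec)
{
    return Spec.Samples * Spec.Channels * Spec.BytesPerChannel;
}

// How much audio one device buffer holds, in milliseconds.
double BufferDurationMs(const requested_audio_spec& Spec)
{
    assert(Spec.Freq > 0);
    return 1000.0 * Spec.Samples / Spec.Freq;
}
```

For the settings above this works out to 16384 bytes per callback, or roughly 85 ms of audio per buffer. A larger `samples` value means fewer callbacks, but more delay before a change in the generated tone reaches the speakers.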

We can then write our callback to place raw LPCM samples into the audio buffer whenever it is called. The following example will play a square wave:

void
FillAudioDeviceBuffer(void* UserData, Uint8* DeviceBuffer, int Length)
{
    platform_audio_settings* AudioSettings = (platform_audio_settings*)UserData;
    Sint16* SampleBuffer = (Sint16*)DeviceBuffer;

    // Length is in bytes; each sample holds one Sint16 per channel
    int SamplesToWrite = Length / AudioSettings->BytesPerSample;
    int HalfWavePeriod = AudioSettings->WavePeriod / 2;

    for (int SampleIndex = 0;
         SampleIndex < SamplesToWrite;
         SampleIndex++)
    {
        // Generate a square wave at the given period to produce tone:
        // the value flips sign every half period
        Sint16 SampleValue = AudioSettings->ToneVolume;
        if ((AudioSettings->SampleIndex / HalfWavePeriod) % 2)
        {
            SampleValue = -AudioSettings->ToneVolume;
        }

        *SampleBuffer++ = SampleValue; // Left channel value
        *SampleBuffer++ = SampleValue; // Right channel value

        AudioSettings->SampleIndex++; // Count as 1 sample
    }
}
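Because the callback only needs a pointer and a length, its sample-generation logic can be exercised without opening an audio device. The sketch below puts equivalent logic in a hypothetical `FillSquareWave` function with a plain `square_wave_state` struct (both assumed names), so you can check that the sign flips every half period, that one full cycle spans WavePeriod samples, and that both channels receive the same value.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the relevant platform_audio_settings fields.
struct square_wave_state
{
    int SampleIndex; // running count of samples generated so far
    int WavePeriod;  // full period of the tone, in samples
    int ToneVolume;  // peak amplitude
};

// Fill an interleaved stereo int16 buffer with a square wave, SDL-free.
void FillSquareWave(square_wave_state* State, int16_t* Out, int FrameCount)
{
    int HalfPeriod = State->WavePeriod / 2;
    assert(HalfPeriod > 0);
    for (int Frame = 0; Frame < FrameCount; ++Frame)
    {
        // Positive for the first half of each period, negative for the second.
        int16_t Value = (int16_t)State->ToneVolume;
        if ((State->SampleIndex / HalfPeriod) % 2)
        {
            Value = (int16_t)-State->ToneVolume;
        }
        *Out++ = Value; // left channel
        *Out++ = Value; // right channel
        State->SampleIndex++;
    }
}
```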

Our platform_audio_settings data structure would look something like the following:

struct platform_audio_settings
{
    int SamplesPerSecond;
    int BytesPerSample;
    int SampleIndex;
    int ToneHz;
    int ToneVolume;
    int WavePeriod;
};

// Configure audio settings for sample generation
platform_audio_settings AudioSettings = {};
AudioSettings.SamplesPerSecond = 48000;            // Sample rate in Hz
AudioSettings.BytesPerSample = 2 * sizeof(Sint16); // 16-bit audio depth, 2 channels
AudioSettings.SampleIndex = 0;
AudioSettings.ToneVolume = 3000;
AudioSettings.ToneHz = 262;                        // Approximately middle C

// Wave period in samples
AudioSettings.WavePeriod = AudioSettings.SamplesPerSecond / AudioSettings.ToneHz;
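One thing to be aware of: WavePeriod is computed with integer division, so the pitch you actually hear is SamplesPerSecond / WavePeriod rather than exactly ToneHz. The hypothetical helpers below just do that arithmetic. 48000 / 262 truncates to 183 samples, which plays at about 262.3 Hz (middle C is about 261.6 Hz), and a square wave that flips every 183 / 2 = 91 samples has a 182-sample period, about 263.7 Hz.

```cpp
#include <cassert>

// Hypothetical helper: wave period in whole samples (truncating division).
int WavePeriodFrames(int SamplesPerSecond, int ToneHz)
{
    assert(ToneHz > 0);
    return SamplesPerSecond / ToneHz;
}

// Hypothetical helper: the frequency a given whole-sample period really plays at.
double ActualToneHz(int SamplesPerSecond, int PeriodFrames)
{
    assert(PeriodFrames > 0);
    return (double)SamplesPerSecond / PeriodFrames;
}
```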

All of this looks pretty familiar and straightforward given our explanation of LPCM above. However, there is one big problem with this, and with SDL’s audio system in general. The use of a callback function to fill the audio buffer is quite different from DirectSound’s circular buffer design. This difference is large enough that it makes mapping the Handmade Hero examples onto SDL fairly tricky.

To bridge this gap, it would be helpful if we could simply mimic DirectSound’s circular buffer by hand and play its contents out from within the SDL callback.

Circular Audio Buffers

There is an additional benefit to using a circular buffer that makes it particularly worth pursuing: it gives us a clean separation of the platform-independent audio components (buffer writing) from the platform-dependent audio components (buffer reading / audio playback). Before we hop into the implementation, let’s review circular audio buffers to get a sense for what we’ll be writing.

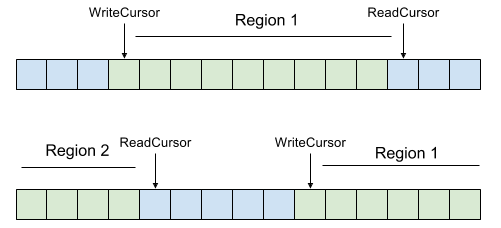

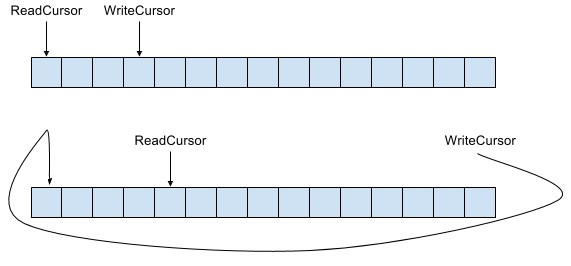

A circular audio buffer is a chunk of memory along with two cursors (byte offsets) into that memory: a ReadCursor and a WriteCursor. These cursors are initialized so that the WriteCursor is slightly ahead of the ReadCursor (typically by 1 sample). This is necessary for the first writing pass to fill (nearly) the whole buffer, and it also simplifies the logic of the actual implementation. If you’d like to explore this more, experiment with starting the ReadCursor and WriteCursor off at the same position and listen to what happens to the audio playback as a consequence.

What makes such a buffer “circular” is that either cursor simply wraps around and starts from the beginning should it ever reach the end of the buffer while it is doing its thing (reading or writing). This allows you to maintain the illusion of a continuous “stream” of data within a fixed-size chunk of memory.

Audio playback simply copies as much data as it needs starting from the ReadCursor. When it is done, it advances the ReadCursor to the position following the byte it last read.

Audio writing works similarly, but starts from the WriteCursor and fills the buffer with data up until the ReadCursor. As Casey showed, this can produce two separate cases: (1) the write does not roll past the end of the buffer, or (2) it does. In the first case, we can simply write one contiguous region of memory. In the second, we have to write two separate contiguous regions: the first from the current WriteCursor position to the end of the buffer, and the second from the beginning of the buffer up to the ReadCursor.
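The two writing cases boil down to a little cursor arithmetic. Here is a sketch of just that part, using a hypothetical `ComputeWriteRegions` helper that follows the same convention as the SDL code later in this post: free space runs from the WriteCursor up to the ReadCursor, and equal cursors mean there is nothing to write.

```cpp
#include <cassert>

// The two contiguous byte ranges a writer may fill: Region1 starts at the
// WriteCursor; Region2 (possibly empty) starts at offset 0.
struct write_regions
{
    int Region1Size;
    int Region2Size;
};

// Hypothetical helper computing free space from WriteCursor to ReadCursor.
write_regions ComputeWriteRegions(int ReadCursor, int WriteCursor, int Size)
{
    assert(ReadCursor >= 0 && ReadCursor < Size);
    assert(WriteCursor >= 0 && WriteCursor < Size);
    // Case 1: ReadCursor is ahead, so the free space is one contiguous run.
    // (Equal cursors give a size of 0: nothing to write.)
    write_regions Regions = {ReadCursor - WriteCursor, 0};
    if (ReadCursor < WriteCursor)
    {
        // Case 2: fill to the end of the buffer, then wrap to the start.
        Regions.Region1Size = Size - WriteCursor;
        Regions.Region2Size = ReadCursor;
    }
    return Regions;
}
```

With a 16-byte buffer (four 4-byte stereo samples), starting the ReadCursor at 0 and the WriteCursor one sample ahead at 4 gives a 12-byte first write: everything except the one sample between the cursors.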

SDL Audio With A Circular Buffer

To implement such a circular audio buffer in SDL, it helps to first revisit our callback. Assuming the callback’s UserData pointed to our circular buffer, how would we feed its contents to the sound device? The following is one possible way:

// 'internal' is Handmade Hero's shorthand for 'static' (#define internal static)
internal void
FillAudioDeviceBuffer(void* UserData, Uint8* DeviceBuffer, int Length)
{
    platform_audio_buffer* AudioBuffer = (platform_audio_buffer*)UserData;

    // Keep track of two regions. Region1 contains everything from the current
    // ReadCursor up until, potentially, the end of the buffer. Region2 only
    // exists if we need to circle back around. It contains all the data from the
    // beginning of the buffer up until sufficient bytes are read to meet Length.
    int Region1Size = Length;
    int Region2Size = 0;
    if (AudioBuffer->ReadCursor + Length > AudioBuffer->Size)
    {
        // Handle looping back from the beginning.
        Region1Size = AudioBuffer->Size - AudioBuffer->ReadCursor;
        Region2Size = Length - Region1Size;
    }

    SDL_memcpy(
        DeviceBuffer,
        (AudioBuffer->Buffer + AudioBuffer->ReadCursor),
        Region1Size
    );
    SDL_memcpy(
        &DeviceBuffer[Region1Size],
        AudioBuffer->Buffer,
        Region2Size
    );

    AudioBuffer->ReadCursor =
        (AudioBuffer->ReadCursor + Length) % AudioBuffer->Size;
}

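As you can see, this handles both cases when reading from the buffer, copying two separate regions if necessary. When no wrap-around is needed, Region2Size is 0 and the second SDL_memcpy simply copies nothing, so it needs no special guard. Note that this logic assumes the circular buffer is at least Length bytes long.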
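Since the read side only shuffles bytes, it can be checked without an audio device. This sketch reproduces the callback's logic in a hypothetical `ReadFromRing` function over a plain `ring_buffer` struct (both assumed names), using `std::memcpy` where the SDL version uses `SDL_memcpy`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical SDL-free stand-in for platform_audio_buffer.
struct ring_buffer
{
    uint8_t* Buffer;
    int Size;        // in bytes
    int ReadCursor;  // byte offset of the next byte to play
    int WriteCursor; // byte offset of the next byte to write
};

// Copy Length bytes out of the ring starting at ReadCursor, wrapping to the
// start of the buffer if needed, then advance ReadCursor.
void ReadFromRing(ring_buffer* Ring, uint8_t* Dest, int Length)
{
    assert(Length >= 0 && Length <= Ring->Size);
    int Region1Size = Length;
    int Region2Size = 0;
    if (Ring->ReadCursor + Length > Ring->Size)
    {
        Region1Size = Ring->Size - Ring->ReadCursor;
        Region2Size = Length - Region1Size;
    }
    std::memcpy(Dest, Ring->Buffer + Ring->ReadCursor, Region1Size);
    std::memcpy(Dest + Region1Size, Ring->Buffer, Region2Size); // copies nothing if 0
    Ring->ReadCursor = (Ring->ReadCursor + Length) % Ring->Size;
}
```

Reading 4 bytes from an 8-byte ring whose ReadCursor sits at 6 returns bytes 6, 7, 0, 1 and leaves the cursor at 2.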

Writing to the buffer must also deal with the Region1 and Region2 logic. We can, however, isolate much of that complexity by passing in a function that generates each sample value, so the same buffer-filling routine works for any waveform:

internal void
SampleIntoAudioBuffer(platform_audio_buffer* AudioBuffer,
                      Sint16 (*GetSample)(platform_audio_config*))
{
    // Free space runs from the WriteCursor up to the ReadCursor
    int Region1Size = AudioBuffer->ReadCursor - AudioBuffer->WriteCursor;
    int Region2Size = 0;
    if (AudioBuffer->ReadCursor < AudioBuffer->WriteCursor)
    {
        // Fill to the end of the buffer and loop back around and fill to the read
        // cursor.
        Region1Size = AudioBuffer->Size - AudioBuffer->WriteCursor;
        Region2Size = AudioBuffer->ReadCursor;
    }

    platform_audio_config* AudioConfig = AudioBuffer->AudioConfig;
    int Region1Samples = Region1Size / AudioConfig->BytesPerSample;
    int Region2Samples = Region2Size / AudioConfig->BytesPerSample;
    int BytesWritten = Region1Size + Region2Size;

    // Region1: from the WriteCursor towards the end of the buffer
    Sint16* Buffer = (Sint16*)&AudioBuffer->Buffer[AudioBuffer->WriteCursor];
    for (int SampleIndex = 0;
         SampleIndex < Region1Samples;
         SampleIndex++)
    {
        Sint16 SampleValue = (*GetSample)(AudioConfig);
        *Buffer++ = SampleValue; // Left channel
        *Buffer++ = SampleValue; // Right channel
        AudioConfig->SampleIndex++;
    }

    // Region2: from the start of the buffer up to the ReadCursor
    Buffer = (Sint16*)AudioBuffer->Buffer;
    for (int SampleIndex = 0;
         SampleIndex < Region2Samples;
         SampleIndex++)
    {
        Sint16 SampleValue = (*GetSample)(AudioConfig);
        *Buffer++ = SampleValue; // Left channel
        *Buffer++ = SampleValue; // Right channel
        AudioConfig->SampleIndex++;
    }

    AudioBuffer->WriteCursor =
        (AudioBuffer->WriteCursor + BytesWritten) % AudioBuffer->Size;
}
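The writer can be exercised the same way. This SDL-free sketch mirrors the structure of SampleIntoAudioBuffer; `WriteIntoRing`, `sample_state`, `ring_buffer`, and the `CountingSample` generator are all hypothetical names, and the counting generator exists only so the order of written samples is easy to check. It assumes interleaved 16-bit stereo, so one sample occupies 4 bytes.

```cpp
#include <cassert>
#include <cstdint>

struct sample_state { int SampleIndex; }; // hypothetical platform_audio_config stand-in

struct ring_buffer
{
    uint8_t* Buffer;
    int Size;        // bytes; assumed to be a multiple of BytesPerFrame
    int ReadCursor;
    int WriteCursor;
};

const int BytesPerFrame = 2 * (int)sizeof(int16_t); // stereo, 16-bit

// Fill all free space from WriteCursor up to ReadCursor with samples from
// GetSample (same value on both channels), then advance WriteCursor.
void WriteIntoRing(ring_buffer* Ring, sample_state* State,
                   int16_t (*GetSample)(sample_state*))
{
    assert(Ring->Size % BytesPerFrame == 0);
    int Region1Size = Ring->ReadCursor - Ring->WriteCursor;
    int Region2Size = 0;
    if (Ring->ReadCursor < Ring->WriteCursor)
    {
        Region1Size = Ring->Size - Ring->WriteCursor;
        Region2Size = Ring->ReadCursor;
    }
    int16_t* Starts[2] = {(int16_t*)(Ring->Buffer + Ring->WriteCursor),
                          (int16_t*)Ring->Buffer};
    int Frames[2] = {Region1Size / BytesPerFrame, Region2Size / BytesPerFrame};
    for (int Region = 0; Region < 2; ++Region)
    {
        int16_t* Out = Starts[Region];
        for (int Frame = 0; Frame < Frames[Region]; ++Frame)
        {
            int16_t Value = GetSample(State);
            *Out++ = Value; // left channel
            *Out++ = Value; // right channel
            State->SampleIndex++;
        }
    }
    Ring->WriteCursor = (Ring->WriteCursor + Region1Size + Region2Size) % Ring->Size;
}

// Test generator: emits the running sample count.
int16_t CountingSample(sample_state* State) { return (int16_t)State->SampleIndex; }
```

On the first pass over a 16-byte ring with the ReadCursor at 0 and the WriteCursor at 4, it writes three samples and leaves the WriteCursor equal to the ReadCursor. Once the reader has advanced, the next pass continues the count where it left off.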

We can also cook up two simple data structures to hold the fields these two functions need:

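struct platform_audio_config
{
    int ToneHz;
    int ToneVolume;
    int WavePeriod;       // In samples
    int SampleIndex;      // Running count of samples generated
    int SamplesPerSecond;
    int BytesPerSample;   // Bytes per stereo sample, e.g. 4 for 16-bit
};

struct platform_audio_buffer
{
    Uint8* Buffer;
    int Size;             // In bytes
    int ReadCursor;       // Byte offset of the next byte to play
    int WriteCursor;      // Byte offset of the next byte to write
    platform_audio_config* AudioConfig;
};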

Now all we need to do is define some sampling functions we can use to feed our buffer. Let’s do one for the square wave and one for the sine wave:

static const float PI = 3.14159265359f;
static const float TAU = 2 * PI;

///////////////////////////////////////////////////////////////////////////////

internal Sint16
SampleSquareWave(platform_audio_config* AudioConfig)
{
    // Flip between +ToneVolume and -ToneVolume every half period
    int HalfSquareWaveCounter = AudioConfig->WavePeriod / 2;
    if ((AudioConfig->SampleIndex / HalfSquareWaveCounter) % 2 == 0)
    {
        return AudioConfig->ToneVolume;
    }
    return -AudioConfig->ToneVolume;
}

///////////////////////////////////////////////////////////////////////////////

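internal Sint16
SampleSineWave(platform_audio_config* AudioConfig)
{
    // One full cycle every WavePeriod samples. Taking SampleIndex modulo the
    // period keeps the argument to sinf (from <math.h>) small, so float
    // precision holds up as SampleIndex grows.
    int SampleInPeriod = AudioConfig->SampleIndex % AudioConfig->WavePeriod;
    return (Sint16)(AudioConfig->ToneVolume * sinf(
        TAU * SampleInPeriod / AudioConfig->WavePeriod
    ));
}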
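To double-check a sine sampler, here is an SDL-free version as a hypothetical `SineSample` function that takes the index, period, and volume directly and completes one cycle every WavePeriod samples. With a 100-sample period, index 25 should sit at the positive peak, 50 at a zero crossing, and 75 at the negative peak, and index 125 should repeat index 25 exactly.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: one cycle every WavePeriod samples. Reducing the
// index modulo the period keeps the float argument to sin small.
int16_t SineSample(int SampleIndex, int WavePeriod, int ToneVolume)
{
    assert(WavePeriod > 0 && SampleIndex >= 0);
    const float Tau = 6.28318530718f;
    float Phase = Tau * (float)(SampleIndex % WavePeriod) / (float)WavePeriod;
    return (int16_t)(ToneVolume * std::sin(Phase)); // truncates toward zero
}
```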

Now, in the main loop of our program, we just regularly write data into the audio buffer. Because SDL invokes the audio callback on its own thread, we need to avoid race conditions by locking the audio device, which ensures the callback isn’t executed until the buffer writing is done:

SDL_LockAudioDevice(DeviceID);
SampleIntoAudioBuffer(AudioBuffer, &SampleSineWave);
SDL_UnlockAudioDevice(DeviceID);

Conclusion

You can find a full working example here.

This was a lot of fun to write and occasionally quite frustrating to debug, but it forced me to finally learn about LPCM and SDL Audio in the process. Thank you for reading along if you made it this far. As always, if you have any questions / comments / concerns, feel free to post